Launch of our new report on artificial intelligence in safety-related systems

Published: Wed 25 May 2022

Artificial intelligence (AI) is an enabling technology for autonomous systems.

Its use in safety-critical product development is increasing significantly and delivering benefits for users.

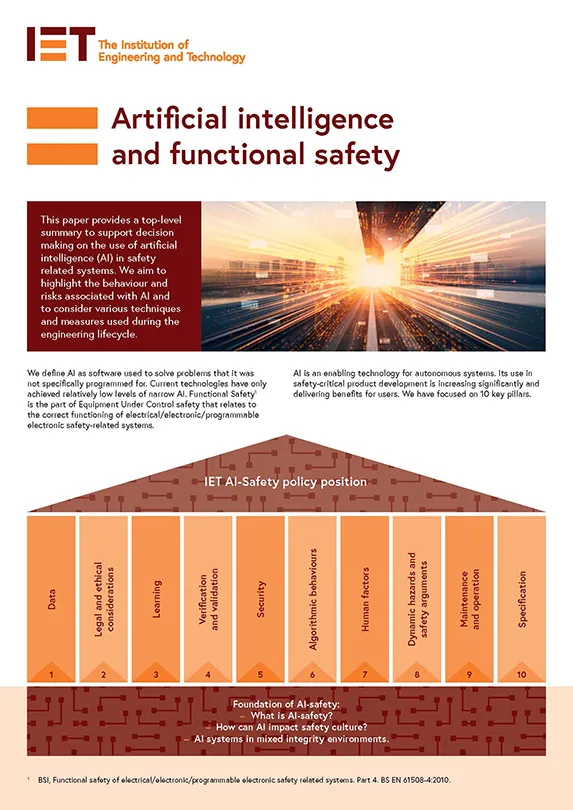

Functional safety is the part of the overall safety of equipment under control (EUC) that depends on the correct functioning of electrical/electronic/programmable electronic (E/E/PE) safety-related systems.

Applying artificial intelligence within the engineering life cycle introduces risks, and a range of techniques and measures needs to be considered to manage them.

We have focused on 10 key pillars:

This paper is the first in a series of IET outputs on this topic. A more detailed document is currently being developed and will be published shortly.

To provide feedback on this paper, please contact us at policy@theiet.org.